Industries

Industries we work with

AdTech Development Company

Reliable, productive AdTech solutions help AdTech companies, digital agencies, publishers, and brands reach their business goals faster and more efficiently.

DSP

Partner with Geomotiv to build, optimize, or upgrade your media buying tech.

SSP

Geomotiv builds custom SSPs or upgrades existing ones using AdTech expertise.

Ad Exchange

Reaching the ambitious goals of both the demand and supply sides is easier with a team of AdTech gurus.

Ad Server

Get a smooth Ad Server launch with our expert AdTech developers.

DMP

Need a custom DMP? Our developers bring deep expertise across ad channels and tech.

CDP

Leverage our AdTech team to use a CDP and unlock real audience insights for maximum efficiency.

Header Bidding

Build or enhance header bidding with our expert team to create strong buyer-seller connections.

OpenRTB Integration

Build or integrate OpenRTB with our full-service AdTech development team.

DOOH Advertising Platform

We build and scale digital out-of-home advertising platforms for transit, retail, roadside, and in-store formats.

Self-Serve Ad Platform

Launch a self-serve ad platform to control ad spend, secure margins, and scale campaigns across every digital channel.

MarTech

Count on Geomotiv – an experienced software development partner crafting and improving MarTech business solutions since 2010.

Linear and OTT TV

The Geomotiv team helps create efficient solutions for advertising campaigns on all screens.

Retail Media Services

Our retail media services help brands and retailers boost revenue through data-driven advertising. We specialize in programmatic ads, audience targeting, and AI-powered analytics, enabling personalized, high-impact campaigns. With expertise in retail media networks, ad monetization, and omnichannel strategies, Geomotiv maximizes customer engagement and sales.

eCommerce Software Development

Our eCommerce software development company offers a wide range of services for any need.

Magento eCommerce Development

Our experts have the required technical and business competence to deliver a comprehensive suite of Magento development services.

eCommerce Store Development

Geomotiv provides custom eCommerce store development services tailored to your requirements.

eCommerce Marketplace Development

Geomotiv is dedicated to bringing custom eCommerce marketplace solutions to life and helping them grow and prosper.

Event Management App Development

Our team empowers event organizers worldwide with high-profile booking solutions that utilize the right features and tools and target the right audiences.

Coupon and Deals App Development

Let us help you develop your own deals and coupon platform to achieve sales objectives with precision, increase conversions, and track the outcomes of marketing activities.

Auctions and Bidding Platforms Development

If you want to create an auction site, turn to Geomotiv. With our experience in online auction platform development services, you will get custom auction apps to suit all your needs.

Services

Explore Our Services

AdTech Team

Our experienced team of developers, fresh off successful projects with Pluto TV and Paramount, is ready to elevate your next AdTech or video streaming solution.

AdTech Solutions

Geomotiv partners with leading companies across streaming, media, gaming, audio, CTV, retail, and RMNs to build custom AdTech solutions aligned with their business goals.

AdTech Consulting Services

Partner with Geomotiv’s AdTech consultants to build scalable platforms, streamline programmatic delivery, and make the best technical decisions.

Dedicated Development Team

Geomotiv gives you access to an ideal dedicated development team whenever you need to augment your in-house team or create a standalone R&D department within your company.

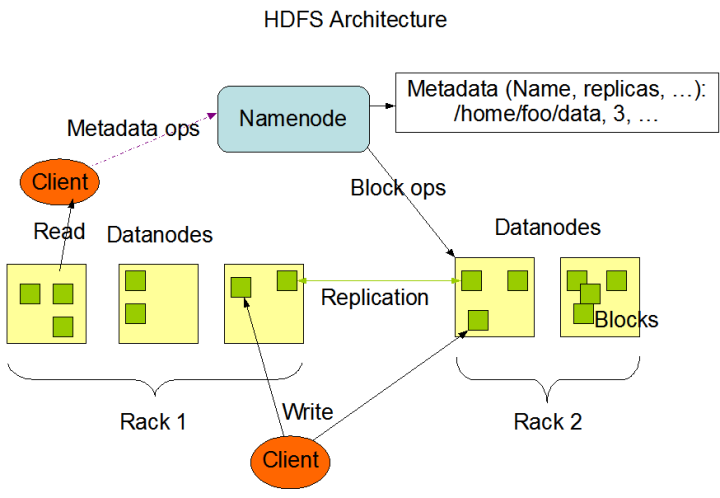

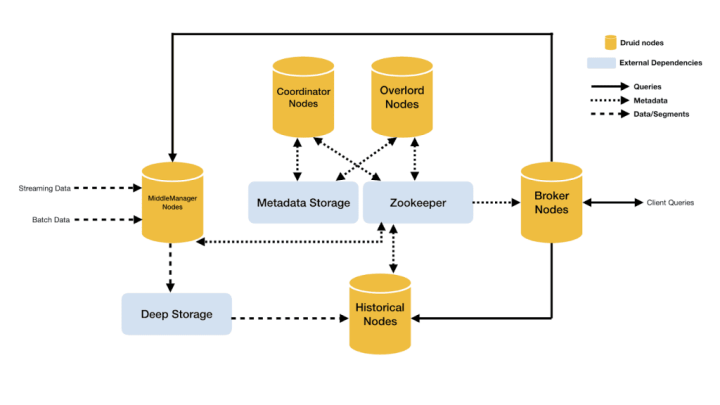

Big Data and Analytics

From a small app to a comprehensive platform-level project, Geomotiv can develop and implement custom software solutions that involve extensive Big Data usage, storage, management, and processing.

ML and AI Development Services

We help you drive business growth with innovative AI and ML services that automate routine processes, expand your app's features, and increase the accuracy of business predictions.

High Load Systems Development

Need exceptional expertise to develop a solid architectural foundation with excellent high-load capabilities?

Software Development and Modernization

Custom software solutions and legacy system upgrades to drive efficiency and innovation.

Enterprise Software Development

As an enterprise software development company, we know how to transform your business with custom enterprise software that enables organizational agility and lets you scale with new business opportunities.

Legacy App Modernization

Promote faster digital transformation journeys and build the foundation for future innovation with Geomotiv’s legacy application modernization services.

Cloud Software Development

With our expertise in cloud software development, we’ll help you to build innovative cloud-based solutions, ensure seamless migration to the cloud, and create a highly reliable cloud ecosystem.

Case Studies

Company

About Us

Geomotiv is a natural choice for companies that want to connect seamlessly with their development partners and foster transparent, win-win cooperation.

Our Team

Successful teamwork starts with individuals. We collaborate to reach common goals, and together we achieve more to provide the best solutions.

Career

Our team is our greatest asset. Our aim is to unlock your potential and let you express your creativity!

Contacts

Contact us to discuss your goals, receive expert guidance, or explore partnership opportunities. We will get back to you shortly.

Blog